There’s something deeply unsettling about realizing that parts of you might still live on somewhere in a machine. Not your photos. Not your emails. But your thoughts. The things you typed into a chat box at 2:14 a.m. because you were bored, curious, or maybe just a little lonely.

I’ve been using AI chat tools for a while – partly out of interest, partly for testing, and partly because I like to see what’s possible. But recently, I hit a moment where I thought: What if I don’t want to be part of this anymore? What if I want Grok to forget me?

Spoiler alert: it doesn’t work the way you think.

Clicking “Opt Out” Isn’t a Magic Eraser

Grok, for those unfamiliar, is the AI chatbot built into X (formerly known as Twitter). It’s developed by xAI, and it’s increasingly becoming the core of that platform’s AI experience.

There’s a privacy setting on X that allows you to “disable training” on your data. That’s what they call it. It sounds empowering. You untick a box and it feels like you just told the machine, “Stop learning from me.”

Except… it doesn’t forget what it already learned.

All this setting does is prevent future conversations from being used to train Grok further. What you’ve already written? That may still be floating around somewhere, or worse – embedded in the system, influencing how it talks to other people.

It’s like telling someone to stop quoting you after they’ve already memorized your jokes.

Delete Chat History? Sure. But That’s Just the Surface

Yes, there’s an option in the app to delete your previous chats with Grok. You can wipe your conversation history with a tap. It even asks you to confirm, just to make sure you really want to delete those lines of text.

But here’s the catch: the only thing you can be sure you’re deleting is your ability to see those messages.

There’s no public guarantee that the back-end systems wipe that data completely, or that Grok didn’t already learn from your wording, your tone, or your phrasing. A trained model doesn’t store text the way a database stores records – it extracts patterns from it. So even if the text is gone, its influence might still remain.

Think of it this way: if you crush a flower, the scent might linger. Data is like that.

Data Deletion Policies Sound Reassuring – Until You Read the Fine Print

xAI says they automatically delete chats from Private Mode after 30 days – unless they need to retain them “for security or legal reasons.” They say they’ll delete user data when accounts are closed. Sounds reasonable.

But here’s the problem: the “unless” clause.

That tiny word – “unless” – is doing a lot of heavy lifting. It opens the door for exceptions. It tells you that your data is probably gone… unless someone at some point decided it needed to stay a little longer.

That’s not exactly comforting.

And of course, the more broadly Grok is integrated across products, the harder it becomes to know where your data might have ended up.

You Can Ask to Be Forgotten, But Can You Ever Be Unlearned?

Let’s say you go all the way: delete your account, clear your chat history, and even email support at xAI asking to be removed from all systems.

You might get a response. You might not.

Even if they honor the request, there’s one massive problem no one wants to talk about: machine learning models don’t forget the way we do. They don’t hold onto your text like a journal. If your words were used for training, they were turned into weight updates – tiny adjustments spread across billions of parameters.

And once that’s done, it’s not like they can “extract you” from the equation. Your influence is part of the system now. Tiny. Untraceable. But still there.

You’re no longer a sentence. You’re a ripple.
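If you want to see the ripple idea in miniature, here is a toy sketch in Python. It has nothing to do with how Grok is actually trained (I have no insight into that). The data points, the `mine` example, and the `train` function are all made up for illustration. It fits a two-weight linear model with plain gradient descent, once with "my" data point and once without it.

```python
# Toy illustration only: a two-weight linear model, not a language model.
# All numbers below are invented for the example.

def train(examples, steps=5000, lr=0.01):
    """Fit y ~ w * x + b by gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(examples)
    for _ in range(steps):
        # Every example contributes to the same two gradients,
        # so individual contributions are blended into w and b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in examples) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in examples) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

others = [(1.0, 2.0), (2.0, 4.1), (3.0, 5.9), (4.0, 8.2)]
mine = (5.0, 14.0)  # hypothetical "my" contribution

with_me = train(others + [mine])
without_me = train(others)

print("trained with my example:", with_me)
print("retrained without it:   ", without_me)
```

The two results differ, but nothing in `with_me` is labelled as mine. In this toy, the only clean way to get my influence out is to retrain from scratch without my data point. That is cheap for two weights and five examples. It is a very different proposition for a model with billions of parameters.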

So What Can You Actually Do?

I don’t want to sound hopeless – but I also don’t want to pretend that you can just vanish from an AI system by toggling a setting.

Here’s what I’ve started doing instead:

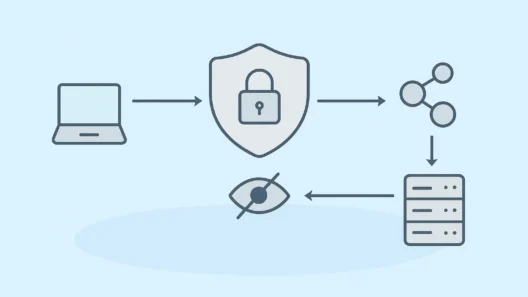

- I no longer feed any personal information into AI tools. No names, no dates, no anecdotes I wouldn’t share publicly.

- I run all my devices through a VPN. It won’t hide anything I type while logged in, but it keeps my IP address and rough location out of the picture, which matters with AI tools tied to social platforms.

- I regularly check my privacy settings, even if I know they’re probably not enough.

And most importantly – I talk about this. Loudly. Because the more we stay silent, the more comfortable these systems become with keeping us inside them forever.
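The first habit on that list can be backed up with a small script. What follows is a rough sketch, not a real PII detector: the `scrub` function and the three patterns are my own assumptions, and they only catch obvious things like email addresses, ISO-style dates, and phone-like digit runs. Names and personal anecdotes will sail straight through, so it is a safety net and nothing more.

```python
import re

# Hypothetical, deliberately simple patterns. Order matters: dates are
# replaced before phone numbers so a date is not mistaken for a phone number.
PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "PHONE": re.compile(r"\+?\d[\d\s().-]{7,}\d"),
}

def scrub(text):
    """Replace obvious personal details with placeholders before pasting."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text

draft = "Mail me at jane.doe@example.com or call +1 555 123 4567 before 2024-03-01."
print(scrub(draft))
# prints: Mail me at [EMAIL] or call [PHONE] before [DATE].
```

I run something like this over a draft before it goes anywhere near a chat box, then read the result myself, because the regexes miss plenty.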

Privacy Isn’t a Checkbox. It’s a Mindset.

You don’t just “protect your privacy” once and call it a day. You have to keep asking questions. You have to be uncomfortable sometimes. And you have to understand that deleting something doesn’t always mean it’s gone.

I tried to erase myself from Grok. I learned a lot. Mostly, I learned how far we still are from actual control over our digital lives.

But I’m not giving up. I’ll keep pushing. Keep testing. Keep deleting.

And in the meantime, I’ll be using my VPN, watching for loopholes, and writing about every strange thing I find.

Because maybe the most powerful thing we can do is simply refuse to be quiet.

By the way, if you’re looking for a simple way to automate data deletion requests, I’ve also tried Incogni – and honestly, it’s a pretty solid service. You give them permission once, and they start contacting data brokers on your behalf. It’s like having a privacy assistant that actually gets things done while you focus on life (or your next VPN test).

Definitely worth checking out if you don’t feel like emailing twenty companies yourself.